Pimoroni Pihub Review

Posted: January 31, 2014 Filed under: Linux, Raspberry Pi, Windows | Tags: hub, pihub, pimoroni, raspberry pi, review, rpi

PiHub By Pimoroni

As some of you know, I dabble on and off with the Raspberry Pi. As a casual user, I use my Pi mostly for playing with Linux and the odd gaming session. The Pi is a fun little computer, so long as you don’t expect too much from it. As an indie games platform it offers a lot of fun, so much that I have even felt myself being drawn to writing a program on the tiny computer.

Anyone who has used a Raspberry Pi for any length of time will know that cables can soon start to mount up. It’s amazing that such a tiny computer can take up so much room. The RPi can work fine as a standalone computer, but start adding a wifi dongle, keyboard, mouse, USB memory stick and card reader, and suddenly you’ve run out of USB ports. There are other ways around the RPi’s two USB ports, but none of them is as simple or straightforward as buying a powered USB hub. First and foremost, if you buy a hub for your RPi, you have to get one that comes with its own power supply.

On early models of the RPi, the USB ports were fitted with polyfuses designed to protect the tiny computer from devices that might try to draw too much power, such as external hard drives or webcams. Later models did away with the two fuses and now the RPi has just one fuse. While the latest design has improved matters, you are still stuck with just the two ports, which is where a powered hub like the Pihub comes in, alleviating your power woes and freeing you from two-port hell.

The Pihub is the creation of Pimoroni, the UK company that also brought us the Picade. Their website offers supplies to a wide audience of tinkerers, modders and electronics hobbyists. One of their recent offerings to the RPi community is the Pihub, aptly named as its housing is in the shape of the Raspberry Pi emblem. Adorned with green leaves and red berry colours, it is by far the cutest hub I’ve seen. The case is but one cool feature of this little device; the hardware inside is pretty impressive as well. When buying your Pihub, you can opt out of buying the accompanying power supply. While this might seem like a good way to save money, I would recommend paying the extra for the PSU, as it is well worth it. Rated at 5.2 volts and 3 amps, it is more than capable of powering the RPi along with anything else you might want to throw at it. Struggling with external CD-ROM drives and USB hard drives is a thing of the past.
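To get a rough feel for what a supply of that size leaves you to play with, here is a minimal Python sketch of a power budget. It assumes the PSU is rated at 3 amps at 5.2 volts, takes 1.1 amps for the Pi from the hub's dedicated Pi port, and uses made-up draws for everything else. The `remaining_amps` helper and the device figures are purely illustrative, so check the labels on your own kit.

```python
# Rough USB power budget for a powered hub (illustrative figures only).
PSU_VOLTS = 5.2   # supply voltage quoted in the review
PSU_AMPS = 3.0    # assumption: the supply is rated at 3 A


def remaining_amps(draws_ma, supply_amps=PSU_AMPS):
    """Return the current (in amps) left over after all devices are powered."""
    total_amps = sum(draws_ma.values()) / 1000.0
    return round(supply_amps - total_amps, 3)


# Hypothetical per-device draws in milliamps; only the Pi figure comes from
# the hub's dedicated 1.1 A port, the rest are guesses for illustration.
devices = {
    "Raspberry Pi": 1100,
    "wifi dongle": 250,
    "keyboard": 100,
    "mouse": 100,
    "USB hard drive": 500,
}

if __name__ == "__main__":
    print("Supply power (W):", round(PSU_VOLTS * PSU_AMPS, 1))
    print("Headroom (A):", remaining_amps(devices))
```

A negative result would mean the devices are asking for more than the supply can give, which is exactly the situation a decent PSU is meant to avoid.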

Unimpeded by polyfuses like the RPi, the Pihub offers the full USB 2.0 package, with a multi-TT (Transaction Translator) chip for bringing USB 1.1 devices in line with the high bus speeds of USB 2.0. Some hubs use only one TT, sharing a single 12 Mbit/s data channel amongst several USB ports, which can significantly impede performance and create a bottleneck. The Pihub, by contrast, has been designed for high performance. It has four USB ports, one of which is specifically engineered to power your Raspberry Pi. Providing a dedicated 1.1 amp supply, it means you no longer need two separate power supplies; you can run everything from just the one PSU. For me this is a massive selling point, because I found the increasing number of bits I needed for my Pi really annoying. My desk has been turned from crazy cable jungle to almost downright respectable. Yes, powering the RPi from the Pihub does mean you’re taking up one of the ports, but you’re still left with three full USB 2.0 ports as well as the spare port on your RPi. Overall I think the trade-off is worth it.
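The single-TT versus multi-TT difference is easy to put into numbers. The Python sketch below is an idealised model only: it assumes USB 1.1 devices on a single-TT hub split the 12 Mbit/s channel evenly and ignores protocol overhead, and `per_device_mbit` is a hypothetical helper written for this example, not part of any USB library.

```python
# Idealised share of USB 1.1 (full-speed) bandwidth behind a USB 2.0 hub.
FULL_SPEED_MBIT = 12.0  # full-speed signalling rate in Mbit/s


def per_device_mbit(num_devices, multi_tt):
    """Return the best-case bandwidth each USB 1.1 device can expect.

    Single-TT hub: every device shares one 12 Mbit/s translator.
    Multi-TT hub: each port has its own translator, so nothing is shared.
    """
    if num_devices < 1:
        raise ValueError("need at least one device")
    if multi_tt:
        return FULL_SPEED_MBIT
    return FULL_SPEED_MBIT / num_devices


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(n, "device(s):",
              "single TT", per_device_mbit(n, multi_tt=False),
              "| multi TT", per_device_mbit(n, multi_tt=True))
```

With three older devices plugged in, the single-TT figure drops to a third of the channel each, while the multi-TT figure stays put. Real-world numbers will be lower in both cases.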

When I spoke with Paul Beech from Pimoroni, he informed me the Pihub had been specifically designed with high quality chips to guarantee 100% compatibility with the RPi. This is no doubt due to the number of cheap hubs on the market that are less than RPi friendly. In an odd turn of events, I actually observed how compatible the Pihub really was in general. After plugging my wifi dongle and mouse in to the Pihub, I connected it to a Windows XP machine. On booting, XP didn’t even ask for drivers and instead logged me straight on to the local area network through the wireless adaptor. I’ve seen few hubs work this seamlessly. High praise has to go to the chaps at Pimoroni.

In conclusion, the Pihub is well worth the £20 if you’re in the market for a decent USB hub for your RPi or PC in general. 10% of profits are given back to the Raspberry Pi Foundation, who use the money to help educate future generations of geeks.

Till next time, keep on geeking!

The Pihub can be found via the Pimoroni store at

http://www.pimoroni.co.uk

A Look Back At Robocop For Original Xbox

Posted: January 19, 2014 Filed under: Retro gaming, Uncategorized | Tags: review, robocop, titan software, xbox

In a recent update you might have seen that I had bought two new games for the original Xbox: Robocop and Dungeons & Dragons: Heroes.

One of these games turned out to be a great buy, while the other one was a massive let down. Can you guess which was the winning game? Well, if you were hoping it was Robocop, you’re bang out of luck. Seldom have I had the misfortune to play a game so full of promise, which instead manages to disappoint at every opportunity. I would even go as far as to say ET on the Atari 2600 is better, purely for the comic value. Yes that’s right, you heard me invoke the name of ET!

Produced by Titus Interactive, Robocop looks like it has the potential to be a good game, that is, until you boot up the Xbox and take one glance at the menu. The options are sparse and the music is, well, terrible. It reminds me of an old 90s dance tune. I would highly recommend selecting the inverted controls before attempting to play a level. I’d say it makes the game easier to play, but then I’d be giving the game more credit than it deserves.

The game comprises eight levels, each made up of sections Robocop must traverse while attempting to complete his primary and secondary objectives. The levels in Robocop are huge, and I mean ridiculously so. It can take up to an hour just to finish one level, and that’s if you know what you’re doing. This might be bearable were it not for the fact that the game does not have mid-level saving, so the only way to save your progress is by finishing the whole level. It is almost unthinkable that the developers did this on a console fitted with a hard drive. Back in the days of the NES and Amiga it was common practice, but then levels did not take a mind-numbing 50 minutes to complete.

Now a word on the levels. As I’ve already stated, they are annoyingly extensive. They are also teeming with bad guys with terrible AI, who are armed to the teeth and take 6-8 shots to kill. Ammo is always in short supply, and Robo’s weapons, of which he has 3-4, are pretty feeble, so you’ll find yourself dying a frustrating number of times. Enemies also have the unfair advantage of shooting through walls and from laughably long distances. Who needs a hunting rifle with a night scope when you can pop off shots with a pistol from half a mile away? As I mentioned, the AI is laughable; play Halo or Ghost Recon and then play Robocop, and you will feel the urge to laugh and probably cry.

Another factor is the difficulty, which goes from passable on level one to absurdly hard on level two, which also happens to be the biggest level of the entire game. You will be confronted with a limited supply of ammo that is dwarfed by the number of enemies scattered around the environment. Half of your time will be spent trying to find ammo or energy; yes, Robocop is no longer impervious to bullets like in the movies, and must now replenish his energy at regular intervals. I can understand the logic behind this, I really can: an impervious Robo would be like running around with the cheat mode permanently switched on. However, the balance of enemies versus supplies is tipped against the player even in easy mode. I played Robocop for several days to prepare for this review and I assure you it was a struggle. I found myself torn between my love for Robocop the character, wanting to find some good in the game, and the fact that the game sucks.

I’m not even sure it is worth covering the sound effects, which are terrible (big surprise!). You will be treated to some of the most cringeworthy one-liners, with Robocop uttering such unfamiliar phrases as “Oh yeah” and “Bullseye”. The voice acting is terrible. From what I gather it was done in house, with the developers lending their voices for several if not all of the characters. Like the rest of the game, the sound is just appalling.

The graphics are sadly nothing to get excited about, resembling something that would look more at home on a Sony Playstation 2. For the original Xbox they are surprisingly bland, colourless and poor. Levels suffer from video glitches, flickering and characters becoming trapped inside walls. Robocop has several view modes, one of which is the almost completely useless thermal vision. In theory it gives you the ability to see enemies through walls; in practice it barely comes close to performing as intended. Half the time it will not show anything useful, leaving you to stumble across enemies.

Playing Robocop is an exercise in futility, which leaves you feeling annoyed, frustrated and betrayed, mostly because the box cover looks deceptively good, leading you to think the game will be as well. That is, until you take it home and try playing it on your Xbox. Ten minutes in and the illusion evaporates faster than water on the pavement during a heat wave. This is one game to leave out of your collection. Playing it will result in the overwhelming desire to throw your controller at the television. So my advice would be to not play it, or buy a back-up TV.

Star / Alps S9920 Screen Repair

Posted: January 14, 2014 Filed under: Android, Handheld | Tags: alps, digitizer, repair, s9920, screen, screen fix, star 9920

Is Your Phone Acting Up?

A week ago I wrote a review of the Alps / Star S9920, in which I highlighted some of the highs and lows of buying this popular S3 mini clone. Shortly after writing the article I began receiving messages from fellow S9920 owners, who were also struggling with their devices. I still don’t have a solution to all the problems that have been put to me (and I did look); there just isn’t that much documented on the S9920. This isn’t much of a surprise when you think of the number of phones that come out of China. Unlike major brands such as Samsung, HTC or Apple, Alps constitutes but a footnote in the Android device market.

In this article, I’ll try to address one of the main issues experienced by users of the S9920: ghost touching. It manifests itself in the form of icons randomly selecting themselves without the screen ever being touched. This fix may also help with some of the other problems associated with the digitizer / screen on the S9920.

Before we begin, I must stress that this is a very tricky fix and requires you have a steady hand for soldering. I would only recommend you attempt this fix after exhausting all other avenues. Make a mistake and you could very well damage your phone beyond repair. Know that you do this at your own risk, and I cannot be held responsible if you kill your phone. With the disclaimer out of the way, let us continue.

The Phone Blues

A month after receiving my phone, the telltale signs that something wasn’t quite right began to emerge. Sometimes I would pick up my phone and the screen would not register my finger swiping it, while on other occasions the phone would act as if it had been possessed, randomly opening apps, usually ones located at the bottom right of the screen, close to the back button. The only way I found to make it stop was to place the phone on a flat surface and run a finger firmly across the screen, directly over where the back button icon is located.

A few minutes doing this and the phone would usually begin to act normally. However, these bouts of the ‘crazies’ were becoming ever more frequent, making the phone very unreliable for daily use. I contacted Dracotek, the company I bought the phone from, and explained the problem I was having. Their representative kindly offered to replace the faulty handset. This really is a Chinese company with good customer support, in fact the best I have encountered. Generous as the offer was, after reading all the articles I could about the S9920, I realised that a replacement would only delay the inevitable, as the S9920 clearly has an inherent flaw in regard to the touch screen.

After making sure I had a back-up phone, I decided to try out a suggestion I found online while reading up on the S9920. This involved carefully disassembling the phone, detaching the screen and removing the motherboard, then, with a fine-tip soldering iron, reflowing several points on the rear of the board. Without any photos to go on, this possible repair was really a shot in the dark, but I decided to give it a go.

Things you will need:

To perform this fix you will require:

1x 25 watt soldering iron

1x Set of Phillips screwdrivers

1x Small flat screwdriver or plastic separating tool, as used for opening iPods

Disassembling your S9920

First make sure your phone is turned off, then remove the back cover and the battery and place them to one side. Next, using a small Phillips screwdriver, remove the eight screws from the rear of the phone. The bottom right screw is covered with a white sticker. By breaking this you will void the warranty of your device, which means you won’t be able to return your phone, so be certain that you wish to continue.

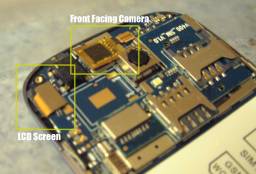

With the screws removed, pick up the phone and examine the edge. You should see a series of small slots; this is where you will need your small flat-headed screwdriver. Start by inserting it into these slots and gently prying the plastic apart. The back and front of the phone should gradually come apart. With the back removed, carefully unclip the two connectors indicated in the photo. These are for the LCD screen and front-facing camera.

Before you go lifting out the motherboard, you will need to un-stick the power and volume buttons. First remove the plastic buttons that cover them. Underneath these are the switches, held in place with sticky-back tape. Using a flat-blade screwdriver, pry them away from the case. Remember to be careful, as replacement parts for the S9920 are thin on the ground. Once you have them unstuck, lift the board up and away from the front case. You should now have the three parts of the phone laid out in front of you: the black rear shell, the logic board and the front case, which includes the LCD screen (see picture). Don’t lose the plastic home button, located at the bottom of the front case.

Turn the logic board over so it looks like it does in the picture above. Now gently remove the yellow tape covering the solder points. There should be one large square at the top of the board and a smaller one at the bottom for the hardware button.

A steady hand will be needed for the next part. Using a fine-tipped soldering iron, heat up the contacts you uncovered from under the tape and ONLY those. Don’t spend too long with the iron on the board; you only need to warm up the solder enough for it to reflow. You should be able to see it happen: the solder will visibly change in appearance, becoming shiny. The contacts pictured above are very fine and tricky, so take your time and try not to rush. Once you have done these, finish off by reflowing the contacts you uncovered on the bottom half of the board.

*Additional note: After I reflowed all the contacts, I took a Stanley knife and scored the board between the tracks, just to eliminate the chance of bridges between the lines. You can do this as well if you wish; simply use a sharp blade and run it down between the contacts you have reflowed. Make sure you do not score the brown ribbon cable, as it is fragile and will not react well to sharp blades.

Before you reassemble the phone, take a look at the board containing the hardware buttons (see picture). On my S9920 this narrow board was not sitting level, which meant it was not aligned well with the front case. This could easily have contributed to the problems I was having with the back button. The board is stuck down with double-sided tape, but can be lifted off by sliding something under it. Apply a little pressure with a flat-bladed screwdriver or Stanley knife, and be careful, as the board does flex and you do not want to snap it. Once it is unstuck, carefully reposition it and press it down; the tape will keep it in place. You can see how mine was slanted in the photo.

Well done! You have done the fix and hopefully your phone should behave a little better. Now you can carefully begin reassembling your phone. You can do this simply by reversing the process of taking it apart. Remember to be careful of the screen and camera cables as well as the volume and power buttons. Remember to place the home button back in the front case before you replace the motherboard.

Update

So it has now been five days since I took my S9920 apart in a last-ditch effort to get the phone, specifically the screen, performing properly. I’m happy to report the repair seems to have worked! So if your screen is acting up as described above, this fix might, and I must emphasise might, restore your phone to normality. I was dubious as to whether reflowing the contacts would have any effect, but it has. I can only conclude that the traces on the board are simply not very good, or perhaps that scoring the board as I did isolated the tracks and stopped any bridging that might have been occurring.

That’s all for now folks, keep on geeking!

Latest Xbox Acquisitions

Posted: January 10, 2014 Filed under: Retro gaming | Tags: adventure, dnd, games, review, robocop, xbox

Yesterday I picked up two games for the original Xbox: Dungeons & Dragons: Heroes and Robocop. After looking online, I’m not expecting much from the latter; movie tie-ins rarely work well. As the Xbox is now very much a retro console, it seems only fitting to do a review on the good and not so good games of the original Xbox.

Capcom DuckTales Remastered

Posted: January 8, 2014 Filed under: Retro gaming, Windows | Tags: Capcom, ducktales remastered, NES, Nintendo, old skool gaming, platformer, xbox 360

Once in a while a game comes along that makes you think “I have to play that!”. Such was the case with Capcom’s “Ducktales Remastered”, which gives the popular NES game of the same name a fresh new look, ready for high def. It was developed by WayForward, who are also known for developing Contra 4.

In this remastered offering, you play as Scrooge McDuck or one of his three nephews, Huey, Dewey and Louie, who embark on a classic adventure to exotic locations around the world, while searching for five legendary treasures. The stages are non-linear, so it is up to the player to decide where to go. Levels play very much like any 8-bit platformer back in the day, with the player exploring each level to pick up health and coins and to defeat the various enemies that are out to stop Scrooge as he seeks his treasure.

Ducktales Remastered is a fantastic tribute to the original 80’s platformers, as well as the original NES Ducktales game. Boasting hand-drawn characters and a lush 3D environment, you wouldn’t think it could get any better, but it does! Ducktales Remastered features the original Disney voice actors from the 80’s cartoon show, providing voices for in-game dialogue. Did I mention the original theme tune has also been included in the game? While I have never played the original NES version, the remastered offering has every bit the feel of a classic platformer. For younger gamers who are used to Skyrim, Call of Duty or Assassin’s Creed, Ducktales may prove to be an acquired taste, but I believe 5-10 year olds will still get fun from playing these Disney characters. Older gamers will buy this game out of nostalgia or a long-standing love for retro games. If, like me, you’re a sucker for the cartoons you watched growing up, then this game will pander to your inner child. Ducktales Remastered is available on the Playstation Network, Xbox Live Arcade, Wii U shop and Steam.

The version I played was for the Xbox 360, which lends itself perfectly to this platformer. The controls are smooth and sharp. Ten minutes in to the game I found myself humming along to the Ducktales theme tune, completely absorbed in my game. It is said the development team poured their heart and soul in to this Ducktales and it really does show. The love for the material radiates straight off the screen, from the music score down to Scrooge’s chest going up and down to indicate he is breathing. The level of detail which has been put in to Ducktales makes it one of the best games I have bought in recent years.

Credit must also be given to Capcom, for being faithful to the original game and producing a quality title that does much for the Ducktales franchise. A lesser publisher might have been tempted to cut corners, to simply cash in on the name, something that often happened in the 80’s and 90’s, much to the frustration of Nintendo and Sega gamers alike. Anyone remember Total Recall for the NES or E.T. for the Atari 2600? Nuff said!

All images featured are the property of their respective owners

TenFourFox Developer Explains All

Posted: January 8, 2014 Filed under: Uncategorized | Tags: 10.4fx, apple, g4, ibook, TenFourFox, web browser

Today ByteMyVdu interviews Cameron Kaiser, the man behind the Apple browser TenFourFox or 10.4Fx, the web browser that breathes life back in to aging G3-G4 Apple computers.

Where did the idea of TFF originate from and why?

I was, for many years, a very loyal Camino user even back to the days when it was Chimera, migrating there quickly from Mac IE. I’ve favoured Mozilla browsers because of the open way Mozilla conducts their affairs, even if I sometimes disagree with the decisions they make, but Firefox in those days was only somewhat Mac-like (a deficiency which still persists to some degree owing to XUL’s cross-platform nature, making it a jack of almost any platform but a master of none), and Camino gave me a Gecko-class browser in a very pretty native widget wrapper. [For your readers who don’t know, Gecko is the name for Firefox’s underlying layout engine that downloads, processes and displays the page.]

However, the Intel transition had come and gone and by 2010, Mozilla announced they would drop both 10.4 and PowerPC for “Gecko 1.9.3” (what would become Firefox 4). This would almost certainly cause Camino to do the same for what was planned as Camino “2.2” and the Camino developers had already been talking on IRC about dropping it. Camino was hard to develop because of changes Mozilla made internally which favoured Firefox, and it was getting harder, making any reduction in their workload welcome. It made the most sense at the time, then, to try and keep the Gecko platform alive. It might even be possible to graft it into an old shell Camino that still worked in 10.4, so I figured that I might be able to squeeze one more release out of Firefox. The timing was right, because Firefox 4 was shaping up to be a major update over Firefox 3.6 with lots of improvements to HTML5.

There wasn’t really anything altruistic about it then or now. I wanted a browser that was current, because my quad G5 was only four years old then and I had bought it not too much before Apple announced the Intel switchover, and it had to be for 10.4 because I still use Classic applications a lot. I had also learned from working on Classilla that trying to backport a browser long after the code has advanced is really, really, really hard, so I needed to make the jump as quickly as possible or the browser would fall behind and never catch up. The fact that it works well for many people is, admittedly, secondary; I’m glad lots of people find it useful, but *I* needed it!

On November 8, 2010, I finished porting Firefox 4.0 beta 7 to PowerPC 10.4 and released that as TenFourFox, since it was already somewhat divergent internally from the regular Firefox Mozilla was shipping for Intel 10.5 (it’s a long story, but Firefox’s trademark requirements are very strict and I didn’t want to run afoul of them). At that time I didn’t even ship separate G4 and G5 builds; it was a G3 and an “AltiVec” build, which proved inadequate, and by beta 8 I shipped the standard set of four that we continue to offer to this day. Porting Firefox early instead of waiting for Camino to catch up turned out to be absolutely the right call. In 2011, Mozilla ended what they called “binary embedding” which was the major way Gecko was glued into the various native-widget browsers such as K-Meleon and, of course, Camino. There were a lot of reasons for this, some good and some less good, but it was detracting from their core work on Firefox and it had issues (as the Camino developers would attest). This was why Camino tried to move to WebKit first, since they would not be able to effectively embed Gecko anymore (or would be essentially just a skin on Firefox), and then eventually just gave up.

Did you face any challenges while developing TFF?

Porting 4 was a lot of work, but mostly tedious work rather than really hard work, which involved restoring much of the 10.4 code Mozilla had stripped out and figuring out how to get around the font problems since 10.4 has a very deficient “secret” CoreText and Mozilla no longer wanted to use ATSUI (for good reasons — ATSUI can be temperamental). They solved this latter problem mostly themselves, by the way, by embedding an OpenType font shaper called Harfbuzz and I just made it use that, which works for about 95% of the use cases. With these and other things fixed and code added back, it could be easily built with existing Xcode tools and using MacPorts for the gaps. In that timeframe I also wrote the first-ever PowerPC JavaScript just-in-time compiler using the partially written one Adobe had for the now defunct Tamarin project (learning about the G5 assembly language quirks Apple never documented the hard way), and started conversion of the WebM video module to AltiVec.

The first problems came when jumping to Firefox 5. Mozilla was moving to their new “rapid release” system which, while requiring me to adhere to their rigid schedule, was a relief in some ways because they couldn’t do a lot of damage between individual releases and the fixes could be laid in incrementally. So that was more a blessing than a curse, even though it looked really bad in the beginning, and I still have to keep to the upgrade treadmill even now.

The second Firefox 5 problem was more severe. Apple, of course, had not done anything with Xcode 2 after Tiger support ended. Mozilla wanted to merge everything into a superlibrary called “libxul” (on OS X, it’s called “XUL”) which took most of the individual dynamic libraries and allowed them to more effectively connect with each other. Fine, except the Xcode 2.5 linker crashed trying to create it because it was too large, I couldn’t get it small enough, and so I eventually figured we were dead and would live out our lives with a Firefox 4 port. Fortunately, Tobias Netzel stepped in with his port of the Xcode 3 linker to 10.4, and we were in business again.

In Firefox 10, Mozilla ended TraceMonkey support, our first JavaScript compiler (or JIT). Writing a JIT from scratch, especially one with poor documentation, is real drudgery and I was very worried we would not make it on time. Again, another new contributor came to the rescue; Ben Stuhl got it to compile and we alternated back and forth to get it finished for the first Extended Support Release of Firefox, which became TenFourFox 10. This first JIT that we wrote ourselves became PowerPC JaegerMonkey, Mozilla’s code name for this more advanced JIT.

Between 10 and 17, more and more code required more and more complex and ugly workarounds for the aging compiler we were still using. Tobias had done some initial work with getting later compilers to build OS X PPC compatible code, but we encountered another major linker issue when trying to build 19 with the new compiler. This turned out to be a bug in the Xcode linker Apple never fixed that Tobias figured out, and we were able to jump to the new compiler for TenFourFox 19. As a side benefit, some other PowerPC-compatible software packages that were relying on our tool chain work could now build later versions too.

The biggest two challenges right now are finishing yet another JIT, and supporting Firefox’s new Australis interface. The first part is partially done and a baseline JIT is in TenFourFox 24 called PPCBC (PowerPC Baseline Compiler). It doesn’t generate code even as good as old TraceMonkey, but it does it considerably faster than even TraceMonkey did, so the browser becomes more responsive. The next step will be to finish IonMonkey, the secondary optimizing compiler, which still has several more months of work to go and it took nearly seven months of work to get it this far, but it’s starting to finally reap dividends. I just hope that Australis doesn’t break us or require dependencies on features only in 10.6.

What is it about the older Apple hardware that drives you to write software to support it?

Simply, it’s because I still use it. I think Power Macs were built better, and I like that they are a bridge to the past: I have a big investment in Classic software, and I think that 10.4 is still Mac-like enough. I don’t like the direction Apple has taken, especially with 10.7 and later versions, to say nothing of my objections to Intel.

I also have a fondness for PowerPC because it descends from the old IBM POWER and RS/6000 machines, and I used to do a lot of work with them.

Apple made the transition to Intel in 2006. Do you think there is a perceivable difference, aesthetically, between PPC and Intel Apple computers? E.g., do you think people will be writing browsers for their outdated MacBooks ten or twelve years from now?

I think it’s safe to say that the Power Macs are in some manner approaching their limits, with the possible exception of the very late G4s and G5s. My quad, for example, strains to surpass my Core 2 Duo mini from 2007. It can surpass it, of course, and on some things it runs rings around it, but it consumes a comparatively prodigious amount of power to do so and it won’t surpass a Mac Pro of the same generation. Today the quad is an eight-year-old design (they rolled out in 2005). You can’t make it perform as well as an i5 or an i7 anymore.

So, even if it becomes technically possible, it wouldn’t be a lot of fun to, say, use JavaScript MESS or anything on even the last and mightiest of the G5s. But I think people will still use them for certain particular tasks; they still run compatible versions of Photoshop just as well as they did, and they still run AppleWorks or iWork. And it will be convenient to have some sort of browser to have there with them. Myself, it still does almost everything that I bought it for, so I’m still using it, and a browser makes them still feasible as a daily driver even today.

I don’t think the Intel machines will engender quite this level of loyalty, however. The PowerPC’s detractors have said that it was always more of a talisman or a promise of future performance Apple never delivered upon, or that it was more just a way for Apple to distinguish itself rather than a computing architecture they really believed in. I think that’s simplistic and, particularly during the days of the 604 and G3 when PowerPC stomped x86 chips of the same generation flat, completely wrong. But even in the later days of the architecture when Intel finally gained the upper hand, it really was something more special and elegant than everyone else was using and allowed Macs to stand out, and because Power Macs can still run old software, people hang onto them “just in case” in a way they don’t really hang onto old Intel Macs (I’ll expand on this more in the next question).

And really, after the transition, lots of people have concluded that since the architecture is the same, Macs are merely just glorified PCs with nice design and some weird hardware features. Rather than make the effort to write something like a “TenSixFox,” just slap Windows or Linux on it and keep going. Apple certainly doesn’t keep any links to the past anymore, so why bother? There is much less love for something that is much less unique.

Carrying on from the last question, do you think the transition created two separate camps of Apple user, one PPC and the other Intel?

Yes, but for more complicated reasons. Jumping from 68K to PowerPC, Apple had a tremendously more powerful architecture to move to, and they made it painless with the built-in 68K emulator. There was no reason not to go PowerPC, and Apple even built 601 cards for the later 68K models, as you’ll recall. They ran almost everything you ran on your old Mac IIs and Quadras. You can still run 68K software in Classic, for crying out loud. It was a very natural evolution that left nearly no one behind.

When Apple was talking about the Intel transition, though, most people didn’t know that Core was right around the corner — instead, we were still dealing with the gluttonous Pentium 4, which did not have the clear and dramatic performance advantage over G5 that the PowerPC 601 did over the 68040, and those first Apple developer machines had NetBurst P4s in them. Even the first Core Solos were, in retrospect, underwhelming. The quad and late G5s could still beat them in a way the 68040 couldn’t top its successor.

Only by changing the playing field a bit did they show clear advantages.

And then there was Rosetta, which unlike the amazing 68K emulator which operated at such a low level that it was nearly magical, could not run G5 code, could not run Classic applications (even PowerPC ones, let alone 68K code), could not run Java within translated applications, could not run kernel extensions (yes, the 68K emulator could run CDEVs and INITs), … on and on. I suppose we should be thankful it could run Carbon apps, at least.

Rosetta’s quirks drove the first wedge in. If you wanted to have full compatibility, you needed to keep a Power Mac around. Apple removing Classic support from 10.5 made this even more clear to say nothing of removing Rosetta from 10.7. Even later PPC apps that were compatible with Rosetta may have no peer in 10.7 or may be insanely costly to re-buy. I don’t think I’m speaking just for myself when I say that this transition left a lot of people behind, and I think the same ones of us who cling to our Power Macs are the same people who eagerly left our 68K Macs behind in 1993. There was little cost to change then but there’s a big cost to change now, particularly if you’re like me and have a large investment in Mac software going back decades.

I can’t blame Apple for the jump, even if I don’t like it. The gamble clearly handsomely paid off, despite its warts, and IBM was only too happy to get out of the desktop processor business for good. But the transitions have glaring differences between them and Apple apologists ignore them at their peril.

What is your most loved computer?

I’m assuming you mean a Mac here, and while it’s hard to pick, I’d have to say the 12″ iBook G4. I like my iMac G4, but it’s poky, even considering its age. I like my quad G5, but it can be loud and heat up a room. My iBook is tough and reasonably easy to work on, travels well in a small case, and I have spare batteries and parts to last it for years. With aggressive power management and screen dimming I can wring five to six hours out of the battery pack, and the 1.33GHz CPU is more than adequate for PowerPoint presentations, moderate browsing, music and DVDs.

Finally, what is your goal for TFF?

To keep it alive and reasonably current. At some point the wheels fall off and I can’t keep it moving forward with Firefox (possibly when Mozilla drops 10.6 support, which would be a lot of old-style legacy code), and it becomes like Classilla, a legacy browser that still gets some updates and patches even if it isn’t technologically cutting edge anymore. Ultimately I’d like to get at least 10 years life out of my quad as my primary computer and then take stock then. To do that given the current pace of web evolution, I’d need to keep TenFourFox current with Firefox until around 2014 or so. That should be eminently doable, and even after the wheels do fall off, there will still be updates and security fixes. And hey, if we’re still keeping up in 2014, I don’t see any reason to stop.

When Cameron isn’t working on TenFourFox, he can be found maintaining http://www.floodgap.com, a website catering for retro computing with an impressive archive of information on Commodore’s range of 8-bit computers.

I’d like to thank Cameron for his time and insight, and I highly recommend you check out his website via the links below.

Links

TenFourFox

http://www.floodgap.com/software/tenfourfox/

Floodgap